How the Guessing Game Started Listening Better

From Quick Scribbles to Meaningful Recall — Just Like We Do in Real Conversations

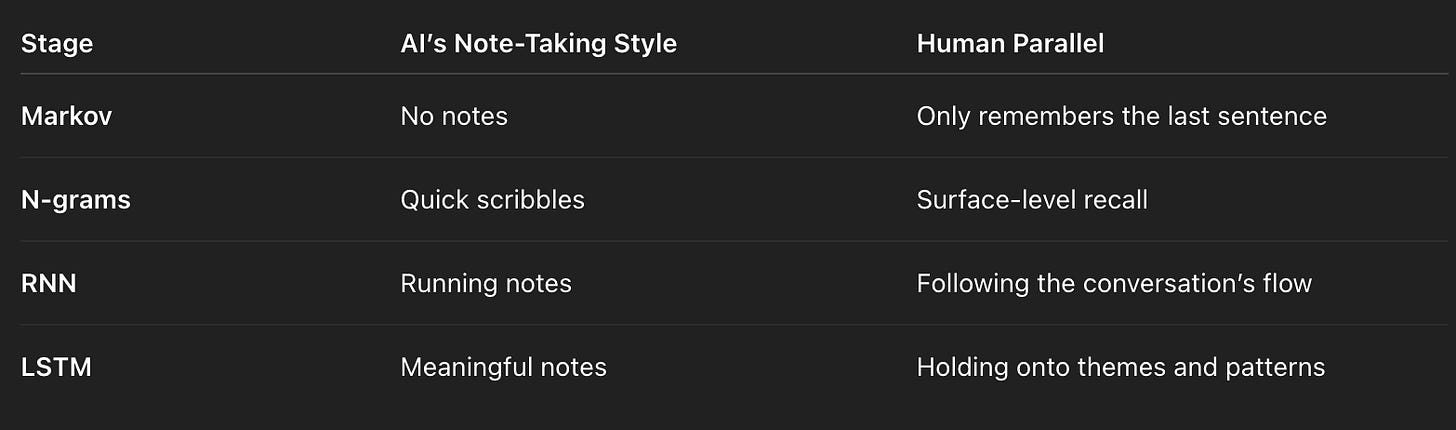

In the last chapter, our little Markov Chain friend could only remember one word at a time.

Great for simple patterns…

not great for following a real story or a meaningful conversation.

To make AI genuinely helpful, we needed it to develop something humans rely on all the time:

The ability to remember what came before — and notice what matters.

Not perfect memory.

Not total recall.

Just the kind of mental note-taking that helps people stay present, understand themes, and connect ideas over time.

Here’s how machines learned that skill — small tech detour ahead, but we promise to keep it human, clear, and nowhere near the scary kind of nerdy.

Step 1 - N-grams: “Quick Scribbles”

N-grams let AI remember a few words instead of just one —

like glancing at a small sticky note mid-conversation.

They help with short, obvious conversational patterns like:

“I’m feeling really ___”

“This week has been kind of ___”

“I want to work on ___”

These quick-fill moments are easy because the clues are right next to the blank.

But the moment a conversation stretches beyond that tiny window, those little mental scribbles stop being enough.
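For the curious, here is a minimal sketch of that sticky note in code. It assumes a trigram model (the last two words are the only context) and a tiny made-up corpus. The function names `train_ngrams` and `predict_next` are hypothetical, chosen just for this illustration.

```python
from collections import Counter, defaultdict

def train_ngrams(text, n=3):
    """Count which word follows each run of (n - 1) words."""
    words = text.lower().split()
    table = defaultdict(Counter)
    for i in range(len(words) - n + 1):
        context = tuple(words[i : i + n - 1])   # the sticky note
        table[context][words[i + n - 1]] += 1   # the word that came next
    return table

def predict_next(table, recent_words, n=3):
    """Guess the next word using ONLY the last (n - 1) words."""
    context = tuple(w.lower() for w in recent_words[-(n - 1):])
    followers = table.get(context)  # .get avoids adding empty entries
    if not followers:
        return None  # never saw this context: the sticky note is blank
    return followers.most_common(1)[0][0]

# A tiny, made-up corpus (an assumption, purely for illustration).
corpus = (
    "i am feeling really tired . i am feeling really tired . "
    "i am feeling really happy . this week has been kind of busy ."
)
table = train_ngrams(corpus)

print(predict_next(table, "i am feeling really".split()))          # tired
print(predict_next(table, "this week has been kind of".split()))   # busy
# Everything before the last two words is invisible to the model:
print(predict_next(table, "my dog died so i am feeling really".split()))  # tired
```

The last line is the whole problem in miniature: the earlier, important words never reach the prediction, because they sit outside the two-word window.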

Human parallel

Someone who remembers the last few words you said…

but not the feeling or intention behind it.

They’re present, but only at the moment-to-moment surface of the conversation.

Good for basic coordination.

Not great for deeper work or insight.

As the conversation gets longer, those little scribbles can’t hold the bigger story.

So AI needed a better way to track meaning.

Step 2 - RNNs: “I’m Following the Thread”

Recurrent Neural Networks (RNNs) gave AI its first real way to keep a running sense of what was happening.

Instead of short scribbles, AI started carrying a running summary (called a “hidden state”) that it updated:

word after word

idea after idea

carrying the earlier parts into the later ones

AI wasn’t just reacting anymore.

It was following the arc.

Human parallel

Someone who stays with you through an extended conversation —

noting your shifts, your hesitations, your tone —

even if some details from the beginning soften around the edges.

They get the general thread, even if the nuances blur.

That’s what RNNs were like.

Capable of holding the conversation…

but prone to losing the early, important moments.
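To make both ideas concrete, here is a deliberately tiny sketch of an RNN: a single number of “memory” that gets updated word by word. It is a simplified picture, not a real trained model. The weights are hand-picked assumptions (real RNNs learn vectors of weights from data), and each “word” is a hypothetical stand-in number: +1 for a strong positive signal, -1 for a strong negative one, 0 for a quiet filler word.

```python
import math

def rnn_step(h_prev, x, w_h=0.5, w_x=1.0, b=0.0):
    """One recurrent step: blend the running summary with the new input.

    w_h, w_x and b are hand-picked toy values (an assumption), not learned ones.
    """
    return math.tanh(w_h * h_prev + w_x * x + b)

def rnn_read(inputs, h0=0.0):
    """Read inputs left to right, carrying one running 'memory' number forward."""
    h = h0
    history = []
    for x in inputs:
        h = rnn_step(h, x)   # earlier parts flow into the later ones
        history.append(h)
    return history

# Two conversations that differ ONLY in their first word.
happy = rnn_read([1.0] + [0.0] * 9)   # strong positive start, then quiet words
sad = rnn_read([-1.0] + [0.0] * 9)    # strong negative start, same quiet words

for step in (0, 4, 9):
    gap = abs(happy[step] - sad[step])
    print(f"after word {step + 1}: gap between the two memories = {gap:.4f}")
```

The gap starts large and shrinks toward zero: with these weights, each quiet word roughly halves whatever the first word left behind, so by the tenth word the two very different openings look almost the same.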

To go deeper, AI needed a way to protect what matters most.

Step 3 - LSTMs: “Keep the Meaningful Notes”

LSTMs (Long Short-Term Memory networks) added a powerful idea:

selective memory.

They didn’t treat every detail equally.

Using small switches called “gates,” they learned to:

notice important signals

hold onto them

let go of the noise

This turned AI from “following the thread” into “tracking themes.”
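Here is a minimal sketch of those gates: a single-unit LSTM cell written out by hand. It follows the standard forget/input/output gate structure, but every weight is a hand-picked toy value (an assumption; a real LSTM learns them from data), and the inputs use the same hypothetical encoding as a toy example might: +1 or -1 for a strong signal, 0 for a quiet filler word. The names `TOY_WEIGHTS`, `lstm_step` and `lstm_read` are made up for this illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hand-picked toy weights (an assumption): (weight on input x, weight on previous h, bias).
TOY_WEIGHTS = {
    "forget": (0.0, 0.0, 4.0),  # bias 4 -> forget gate ~0.98: keep almost all of the old note
    "input": (0.0, 0.0, 0.0),   # input gate 0.5: write half of each new candidate
    "cand": (2.0, 0.0, 0.0),    # candidate note driven by the current word (0 for filler)
    "output": (0.0, 0.0, 2.0),  # output gate ~0.88: reveal most of the note
}

def lstm_step(x, h_prev, c_prev, w=TOY_WEIGHTS):
    """One step of a single-unit (scalar) LSTM cell."""
    def gate(name, squash):
        wx, wh, b = w[name]
        return squash(wx * x + wh * h_prev + b)

    f = gate("forget", sigmoid)  # how much of the old note to keep
    i = gate("input", sigmoid)   # how much of the new candidate to write
    g = gate("cand", math.tanh)  # the candidate note itself
    o = gate("output", sigmoid)  # how much of the note to show right now
    c = f * c_prev + i * g       # long-term note: mostly carried, selectively updated
    h = o * math.tanh(c)         # what the cell "says" at this step
    return h, c

def lstm_read(inputs):
    """Read inputs left to right, carrying both h (output) and c (the note)."""
    h, c = 0.0, 0.0
    outputs = []
    for x in inputs:
        h, c = lstm_step(x, h, c)
        outputs.append(h)
    return outputs

happy = lstm_read([1.0] + [0.0] * 9)
sad = lstm_read([-1.0] + [0.0] * 9)
for step in (0, 4, 9):
    print(f"after word {step + 1}: gap = {abs(happy[step] - sad[step]):.4f}")
```

Because the forget gate sits close to 1 and filler words produce an empty candidate (tanh(0) = 0), the note written by the first word is carried almost unchanged, and the gap between the two conversations stays large even after ten words.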

Human parallel

Someone who intuitively catches the deeper patterns in what you’re saying:

the repeated phrases, the concerns that keep resurfacing,

the emotional moments that matter more than the words themselves.

They don’t memorize everything.

They remember the things that shape the conversation.

That’s what LSTMs gave AI:

a sense of what’s meaningful, not just what’s recent.

This made AI far better at:

connecting ideas

noticing patterns

remembering the heart of the message, not just the wording

For years, this was the most “thoughtful” memory AI had.

From Scribbles to Sense-Making

Each step brought AI closer to how humans naturally track a meaningful conversation —

not by remembering everything, but by remembering what matters.

But even at their best, these models read in a strictly linear way:

Left to right.

One word after the next.

No jumping back.

No zooming out.

Humans don’t think like that.

We revisit earlier ideas, shift perspective, and connect distant moments quickly.

For AI to make the next leap, it needed a new superpower.

To understand how that happened, we need to step away from language for a moment… and visit a surprising branch of AI’s family tree.

Next Up: The Buff Cousin Who Hit the GPU Gym

Before GPT shows up, we take a detour to meet the cousin who spent YEARS staring at cat pictures, lifting math weights, and leveling up like a video game character.

Turns out: cats gave us supercomputers. 🐱⚡